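- TCPConnection: built on the previously implemented TCPReceiver and TCPSender; a complete implementation of one TCP endpoint.

- Its functions and the corresponding core methods:

- Receiving a segment: segment_received()

- The local side receives a segment and, depending on the states of its own receiver and sender, sends the appropriate segments to the peer.

- Note that segment_received() calls send_segments() automatically.

- Sending segments: send_segments() (piggybacks the ACK).

- Passage of time: tick(), which may trigger a retransmission.

- Shutting down the connection:

- unclean_shutdown: discard the segments waiting to be sent, send an RST if this side is the one aborting, set the sender's and receiver's streams to the error state, and set _active = false.

- Active unclean shutdown: send an RST.

- Passive unclean shutdown: do not send an RST.

- clean_shutdown: _active = false.

- Active clean shutdown: in tick(), check whether TIME_WAIT has elapsed; if it has, call clean_shutdown().

- Passive clean shutdown: in segment_received(), after receiving the ACK of our FIN and finding that the connection has reached CLOSED, call clean_shutdown().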

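- The crux: deciding when to end the connection (when the TCPConnection is done).

- Unclean shutdown: sending or receiving an RST ends the connection immediately.

- Clean shutdown:

- Requires prerequisites #1, #2, #3 and #4.

- An endpoint may consider its TCPConnection done, and end the connection, only when it satisfies all four conditions at once.

- #4 can be satisfied in two ways:

- Active close (Option A): cannot be satisfied with certainty; TIME_WAIT only brings it close to 100%.

- This is the Two Generals Problem: it is impossible to guarantee that both sides reach a clean shutdown (the actively closing side cannot be certain of it), but TCP gets as close as possible.

- Passive close (Option B): satisfied with 100% certainty.

- The passively closing side needs no linger time: once #1 and #3 hold, it can be certain of a clean shutdown.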

TCPConnection

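Questions

Why does satisfying #1 through #4 mean the local connection is done?

First, the goal of a clean shutdown is that each side has completely and reliably received the other side's outbound stream.

Second, the four conditions mean the following:

- #2 ensures that every byte of the local outbound_stream has been sent (in this implementation, satisfying #3 implies #2) (TCPSender.state = FIN_SENT).

- #3 ensures that the local side knows its whole outbound stream has been received by the peer (TCPSender.state = FIN_ACKED).

- #1 ensures that the peer's whole outbound stream has been received by the local side (TCPReceiver.state = FIN_RECV).

- #4 ensures that the peer knows its outbound stream has been fully received by the local side; otherwise, following its retransmission rules, the peer would keep retransmitting outbound-stream bytes or its FIN segment (remote TCPSender.state = FIN_ACKED).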

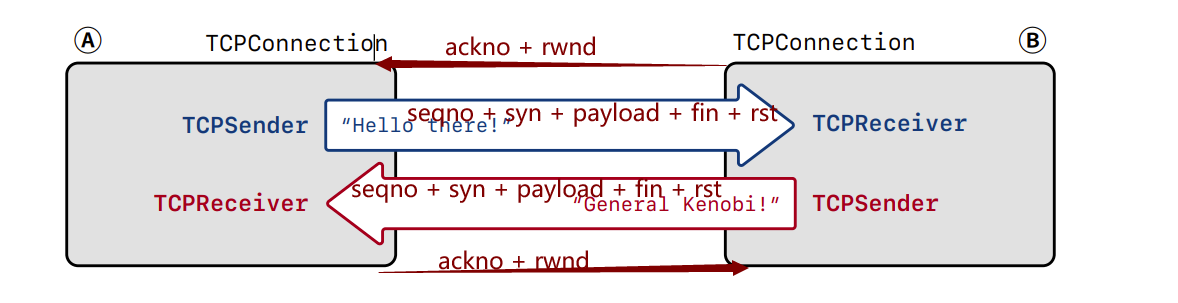

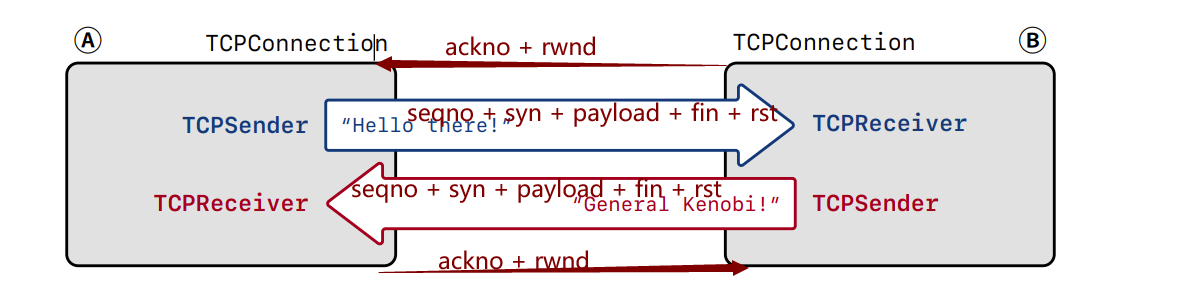

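Why is TCP's byte stream said to be bidirectional?

- Because a TCP connection consists of two one-way byte streams.

- Each endpoint has a TCPSender and a TCPReceiver.

- The byte stream from A to B is driven by TCPSender A toward TCPReceiver B, and is maintained by TCPSender A and TCPReceiver B.

- TCPSender A sends TCPReceiver B the payload of outbound_stream A, together with the SYN and FIN that affect the connection. All three occupy sequence space. This is sender A sending data to receiver B.

- TCPReceiver A sends TCPSender B an ackno and rwnd: receiver A reporting its reception status to sender B.

- TCPReceiver B sends TCPSender A an ackno and rwnd: receiver B reporting its reception status to sender A.

- TCPSender B sends TCPReceiver A the payload of outbound_stream B, together with the SYN and FIN that affect the connection. All three occupy sequence space. This is sender B sending data to receiver A.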

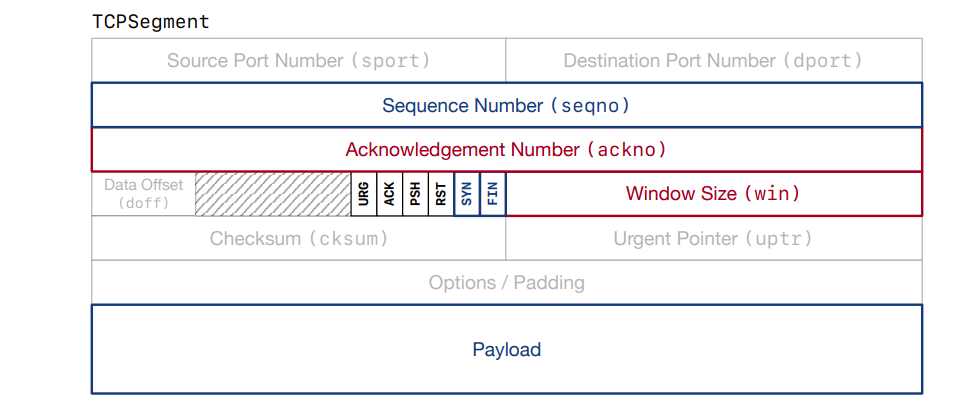

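Why does an ACK sent by A not occupy A's sequence space? In other words, why does an empty ACK segment have length 0?

- Convention: A's sequence space is the one initiated and maintained by TCPSender A. TCPReceiver B acknowledges back to A with ackno values in that space (the rwnd in those segments, of course, reflects TCPReceiver B's own receive window).

- In short: an ACK that A sends to B is not data A wants to deliver to B, only auxiliary information A sends so that B can work correctly. Only the data A wants to send to B (the outbound_stream payload) occupies A's sequence space, plus the SYN and FIN, because they affect the A-to-B connection.

- In more detail (recall that each endpoint consists of a Receiver and a Sender):

- In a segment A sends to B, the seqno is the index, in A's sequence space, of the bytes A is sending to B.

- Only what TCPSender A sends to TCPReceiver B occupies sequence space (SYN + payload (outbound_stream) + FIN).

- The ackno that TCPReceiver A sends to TCPSender B does not occupy TCPSender A's sequence space,

- because it goes from TCPReceiver A to TCPSender B and does not come from TCPSender A at all. Moreover, an ackno is not data, just auxiliary information that helps TCPSender B do its job.

Does every segment carry an ACK? Does every segment carry a seqno?

- Every segment carries a seqno: any segment sent needs one, even if the segment itself occupies no sequence space.

- Almost every segment carries an ackno and rwnd (the exception is the first SYN segment the client sends to the server).

- For example, if A sends B an empty ACK segment with seqno x, the next segment A sends B still has seqno x.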
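
To make the sequence-space rule concrete, here is a minimal C++ sketch. SegmentSketch and next_seqno_after are hypothetical names, not the lab's TCPSegment API. Only SYN, FIN and payload bytes count toward the length; the ACK and RST flags count for nothing, which is why an empty ACK with seqno x leaves the next seqno at x.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Hypothetical, stripped-down stand-in for a TCP segment: only the fields
// that matter for sequence-space accounting.
struct SegmentSketch {
    bool syn = false;
    bool fin = false;
    bool ack = false;  // ACK flag: consumes no sequence numbers
    bool rst = false;  // RST flag: consumes no sequence numbers
    uint32_t seqno = 0;
    uint32_t ackno = 0;
    std::string payload;

    // SYN and FIN each take one sequence number; every payload byte takes one.
    size_t length_in_sequence_space() const {
        return payload.size() + (syn ? 1 : 0) + (fin ? 1 : 0);
    }
};

// The seqno the sender will put on its *next* segment after sending `seg`.
uint32_t next_seqno_after(const SegmentSketch &seg) {
    // uint32_t arithmetic wraps modulo 2^32, like TCP sequence numbers.
    return seg.seqno + static_cast<uint32_t>(seg.length_in_sequence_space());
}
```

An empty ACK sent with seqno 100 yields a next seqno of 100, while a segment carrying "hello" plus a FIN from seqno 100 advances it to 106.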

How does the application read from the inbound stream?

- TCPConnection::inbound_stream() is implemented in the header file already.

- About RST

- This flag (“reset”) means instant death to the connection

- Sending and receiving an RST segment have the same consequence:

- unclean shutdown:

- set both inbound_stream and outbound_stream to the error state

- tear down the connection: active = false

- When is an RST segment sent?

- When there have been too many retransmissions

- When TCPConnection's destructor is called while active == true

There are two situations where you’ll want to abort the entire connection:

- If the sender has sent too many consecutive retransmissions without success (more than TCPConfig::MAX_RETX_ATTEMPTS, i.e., 8).

- If the TCPConnection destructor is called while the connection is still active (active() returns true).

- An RST does not occupy sequence space.

背景

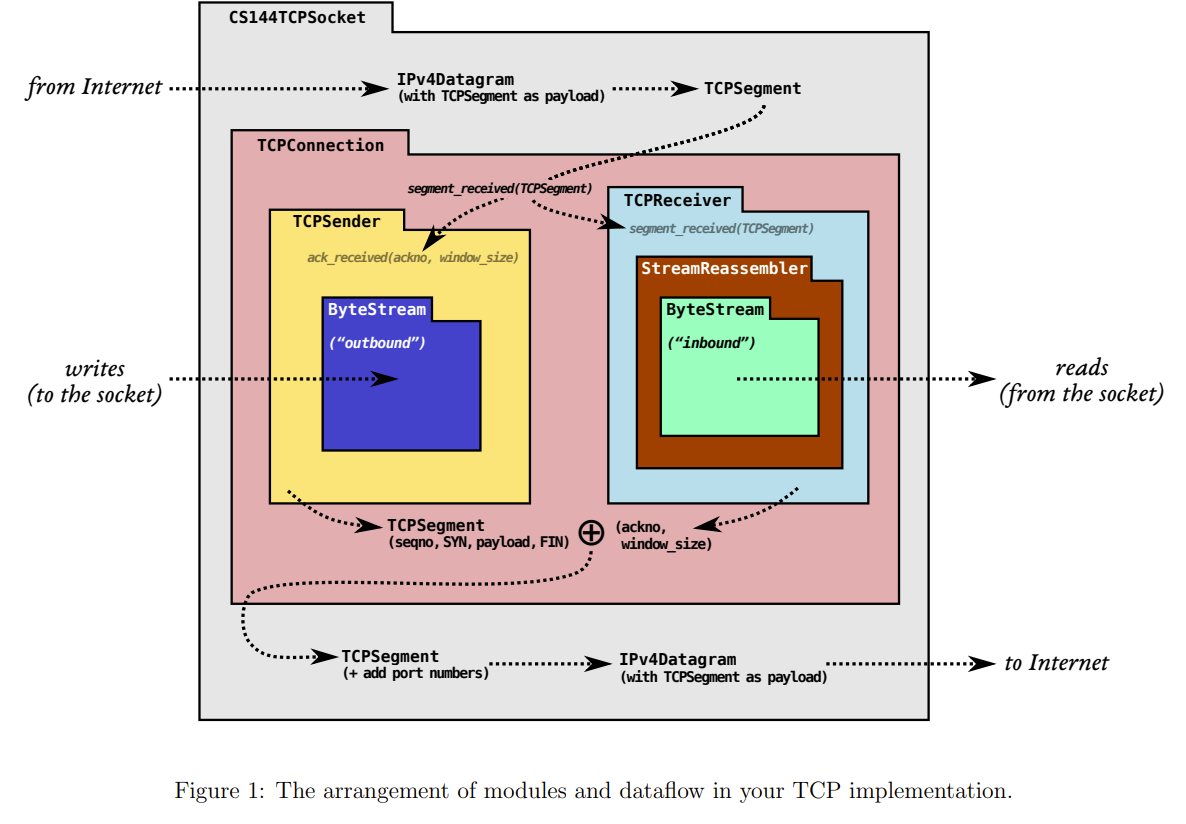

- You have reached the summit.

- lab0 ,我们实现了一个 flow-controlled(流量控制) 的 bytestream

- lab123 , 我们实现了一个 in-both-direction(双向的) 在bytestream和网络提供的unreliable datagrams之间进行通信的 工具(TCPSender和TCPReceiver)

- lab4 , now , 我们将实现一个称为TCPConnection的整体模块 , 整合我们的TCPSender 和 TCPReceiver ,并处理connection的global housekeeping.

- connection的TCPSegment 可以被封装进 uesr UDP segment(TCP-in-UDP) 或者 Internet IP datagrams(TCP/IP) -> 使得你的代码可以和Internet上同样使用TCP/IP的主机进行交互.

- 需要注意的是 , TCPConnection只是将TCPSender和TCPReceiver进行组合. TCPConnection本身的实现只需要100行代码. TCPConnection依赖于TCPSender和TCPReceiver的鲁棒性

- Lab4 : the TCP Connection

- 本周 实现一个可工作的TCP, 我们已将做了大部分的工作 : 已经实现了TCPSender 和 TCPReceiver. lab4的工作是将他们组合在一起,组成一个class TCPConnection , 处理一些对于connection来说全局的事务

- class TCPConnection就是一个peer. 负责接收和发送segment. 确保TCPSender和TCPReceiver 都可以在收到和发送的segment上 添写和读取相应的字段.

- Recall:

- TCP reliably conveys a pair of flow-controlled byte streams, one in each direction

- Two parties participate in the TCP connection, and each party acts as both “sender” (of its own outbound byte-stream) and “receiver” (of an inbound byte-stream) at the same time

- The two parties, A and B, are called the endpoints (or peers) of the connection.

Here are the basic rules the TCPConnection has to follow

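Convention: the local endpoint is referred to as local …, and the remote endpoint as peer …

Functions

Receiving segments

- When TCPConnection receives a segment, segment_received() is called.

In this method:

- For an RST segment:

- if the segment received by local has the RST flag set, then

- set inbound_stream and outbound_stream to the error state,

- send no segment back to the peer in reply,

- and kill the connection immediately.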

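- For a non-RST segment:

- Pass the segment to TCPReceiver, which consumes its seqno, SYN, payload and FIN.

- If the ACK flag is set, tell TCPSender the segment's ackno and window_size.

- If the received segment occupies any of the peer's sequence space, TCPConnection (through the local TCPReceiver) must reply with at least one segment telling the peer's TCPSender the current ackno and window_size.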

```cpp
if (seg.length_in_sequence_space() > 0)  // receiver got SYN, payload, or FIN
    ack_to_send = true;
```

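- Special case:

- TCP keep-alive: we only implement replying to keep-alive segments, not sending them.

- The peer may send a segment with an invalid seqno to check whether the local TCP is still alive.

- The local TCPConnection must reply to such a segment, even though it consumes no sequence numbers.

- As follows: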

```cpp
// local TCP expects the next seqno to be _receiver.ackno().value()
if (_receiver.ackno().has_value() and seg.length_in_sequence_space() == 0 and
    seg.header().seqno == _receiver.ackno().value() - 1) {
    _sender.send_empty_segment();
}
```

- This also shows that a keep-alive segment only checks whether the peer's TCP layer is alive, not whether the peer's application is working, since the keep-alive segment is never delivered to the application.
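
The receive-side rules above can be condensed into one decision function. This is a simplified model, not the lab's code: IncomingSketch, ReplyPlan and plan_for_segment are hypothetical names, and receiver_ackno is assumed to be the receiver's ackno after it has processed the segment. In a real TCPConnection, must_reply would typically become a call to _sender.send_empty_segment() when the sender has nothing else queued to carry the ACK.

```cpp
#include <cstdint>
#include <optional>

// Hypothetical view of an incoming segment: only the fields the dispatch uses.
struct IncomingSketch {
    bool rst = false;
    bool ack = false;
    uint64_t length_in_sequence_space = 0;  // SYN + payload bytes + FIN
    uint32_t seqno = 0;
};

// What the connection should do with the segment (illustrative names).
struct ReplyPlan {
    bool unclean_shutdown = false;    // RST seen: error both streams, no reply
    bool pass_ack_to_sender = false;  // hand ackno + window to TCPSender
    bool must_reply = false;          // at least one segment must go back
};

ReplyPlan plan_for_segment(const IncomingSketch &seg, std::optional<uint32_t> receiver_ackno) {
    ReplyPlan plan;
    if (seg.rst) {
        plan.unclean_shutdown = true;  // die immediately and send nothing back
        return plan;
    }
    plan.pass_ack_to_sender = seg.ack;
    if (seg.length_in_sequence_space > 0) {
        plan.must_reply = true;  // it consumed the peer's sequence space: ACK it
    }
    // Keep-alive probe: no sequence space and seqno == ackno - 1
    // (unsigned subtraction wraps modulo 2^32, so ackno 0 maps to 0xFFFFFFFF).
    if (receiver_ackno.has_value() && seg.length_in_sequence_space == 0 &&
        seg.seqno == *receiver_ackno - 1u) {
        plan.must_reply = true;
    }
    return plan;
}
```

A bare ACK whose seqno equals the expected ackno gets no reply, which avoids two peers ACKing each other's ACKs forever.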

Sending segments.

- The TCPConnection will send TCPSegments over the Internet:

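- The fields of a segment that TCPConnection sends come from two sources: those filled in by TCPSender and those filled in by TCPReceiver.

- TCPSender is responsible for seqno, SYN, payload, FIN and RST.

- TCPReceiver is responsible for the ackno and the receive window size; if an ackno exists, it is written into the outgoing segment (only the client's initial SYN, which opens the connection, should lack an ackno).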

- How does the TCPConnection actually send a segment?

- Similar to the TCPSender—push it on to the segments out queue. As far as your TCPConnection is concerned, consider it sent as soon as you push it on to this queue.

- Soon the owner will come along and pop it (using the public segments_out() accessor method) and really send it.
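
Here is a minimal sketch of the two-part filling described above, written against hypothetical stand-ins (HeaderSketch, send_segments_sketch) rather than the lab's TCPSegment and TCPHeader types. The sender's fields arrive already set; the connection adds the receiver's ackno (only if one exists) and window before pushing onto its own queue. Clamping the window to 65535 is an assumption about fitting a possibly larger window into TCP's 16-bit window field.

```cpp
#include <algorithm>
#include <cstdint>
#include <limits>
#include <optional>
#include <queue>

// Hypothetical, simplified header: which part each component fills in.
struct HeaderSketch {
    uint32_t seqno = 0;                          // filled by the sender
    bool syn = false, fin = false, rst = false;  // filled by the sender
    bool ack = false;                            // set only if the receiver has an ackno
    uint32_t ackno = 0;                          // filled from the receiver
    uint16_t win = 0;                            // filled from the receiver
};

// Move every segment the sender produced into the connection's outgoing
// queue, piggybacking the receiver's ackno and window on each one.
void send_segments_sketch(std::queue<HeaderSketch> &sender_out,
                          std::queue<HeaderSketch> &connection_out,
                          std::optional<uint32_t> receiver_ackno,
                          uint64_t receiver_window) {
    while (!sender_out.empty()) {
        HeaderSketch h = sender_out.front();
        sender_out.pop();
        if (receiver_ackno.has_value()) {  // absent only before the peer's SYN arrives
            h.ack = true;
            h.ackno = *receiver_ackno;
        }
        // The 16-bit window field cannot express more than 65535.
        h.win = static_cast<uint16_t>(
            std::min<uint64_t>(receiver_window, std::numeric_limits<uint16_t>::max()));
        connection_out.push(h);  // "sent" as far as the connection is concerned
    }
}
```

The owner would then pop connection_out, mirroring the segments_out() queue described above.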

When time passes.

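- TCPConnection is told that time has passed through calls to tick()

- TCPConnection::tick():

- tell the TCPSender about the passage of time : TCPSender::tick()

- If there have been too many retransmissions, give up on the connection and send the peer an empty segment with the RST flag set

- end the connection cleanly if necessary

- necessary: #1 through #4 hold, i.e., the TIME_WAIT period has elapsed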
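
The tick() duties above can be condensed into a pure decision function, sketched here with hypothetical names (TickAction, decide_on_tick). It assumes TCPSender::tick() has already updated the consecutive-retransmission count, uses the handout's "more than MAX_RETX_ATTEMPTS" abort rule, and only checks TIME_WAIT when linger_after_streams_finish is true, because in this design a passive close is finished inside segment_received().

```cpp
#include <cstddef>

// Hypothetical outcome of one tick (names are illustrative, not the lab's API).
enum class TickAction { None, AbortWithRst, CleanShutdown };

// Decide what TCPConnection::tick() should do after TCPSender::tick() has run.
TickAction decide_on_tick(unsigned consecutive_retransmissions,
                          unsigned max_retx_attempts,  // e.g. TCPConfig::MAX_RETX_ATTEMPTS
                          bool inbound_fin_recv,       // prerequisite #1
                          bool outbound_fin_acked,     // prerequisite #3 (implies #2)
                          bool linger_after_streams_finish,
                          size_t ms_since_last_segment_received,
                          size_t initial_rto_ms) {     // e.g. _cfg.rt_timeout
    // Too many consecutive retransmissions: give up with an RST (unclean shutdown).
    if (consecutive_retransmissions > max_retx_attempts) {
        return TickAction::AbortWithRst;
    }
    // Active close: #1 and #3 hold and TIME_WAIT (10 x initial RTO of silence) is over.
    if (inbound_fin_recv && outbound_fin_acked && linger_after_streams_finish &&
        ms_since_last_segment_received >= 10 * initial_rto_ms) {
        return TickAction::CleanShutdown;
    }
    // Otherwise nothing to do; a passive close ends in segment_received().
    return TickAction::None;
}
```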

Decide when connection is done

One important function of the TCPConnection is to decide when the TCP connection is fully “done.”

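The following discusses when a TCP connection ends.

The end of a TCP connection: consensus takes work

How a connection ends

When the TCPConnection is done, it

- releases its exclusive claim to a local port number,

- stops sending ACKs in reply to incoming segments,

- considers the connection to be history,

- and active() returns false.

A connection can end in two ways: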

Unclean shutdown

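- Situation: the local side sends or receives an RST segment

- About RST segments: sending one and receiving one have the same effect, an unclean shutdown

- Actions:

- set the outbound and inbound streams to the error state

- active() returns false immediately, and from then on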

clean shutdown

- the connection get to “done” without error

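- Clean shutdown is more complicated but more elegant, because it ensures, as far as possible, that each side's outbound byte stream is reliably and completely delivered to the peer's receiver.

How to get as close as possible to a clean shutdown

- Because of the Two Generals Problem, it is impossible to guarantee that both sides reach a clean shutdown, but TCP gets as close as possible. The following looks at how TCP does this from one endpoint's point of view.

- To reach a clean shutdown with the remote peer on a connection, four prerequisites must hold:

- Prereq #1 The inbound_stream has been fully assembled and has ended.

- The TCPReceiver's inbound_stream has stored all bytes in order, and has also received the final FIN (TCPReceiver receives FIN -> inbound_stream.end_input()).

- i.e., TCPReceiver.state = FIN_RECV

- Prereq #2 The outbound_stream has been ended by the local application and fully sent (including the fact that it ended, i.e. a segment with fin ) to the remote peer.

- The local outbound_stream has been ended (the application called outbound.end_input()), all of its bytes have been sent, and TCPSender has sent the final FIN segment.

- i.e., TCPSender.state = FIN_SENT

- Prereq #3 The outbound stream has been fully acknowledged by the remote peer.

- The local outbound_stream has been fully acknowledged by the remote peer.

- That is, the local side has received the remote peer's ACK for its FIN. This shows that the FIN and everything before it were acknowledged, and the FIN marks that nothing else was sent.

- i.e., TCPSender.state = FIN_ACKED

- Clearly, if #3 holds, #2 must already hold.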

- Prereq #4 The local TCPConnection is confident that the remote peer can satisfy prerequisite #3. This is the brain-bending part. There are two alternative ways this can happen:

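- i.e., the local side is confident that the remote peer has reached TCPSender.state = FIN_ACKED,

- i.e., the local side is confident that the remote peer has received the local ACK of the peer's FIN.

- This is a bit mind-bending; #4 can be satisfied in two ways:

- Option A: the local side can be almost, but not completely, sure that the peer satisfies #3; that is, the local side almost satisfies #4.

- Option B: the local side can be 100% sure that the peer satisfies #3; that is, the local side definitely satisfies #4.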

- Why, once #1 through #4 all hold, can the local side be torn down and disconnect completely (shut down its Sender and Receiver and stop interacting with the outside world)? For the reasons given in the Questions section above: #1 and #3 show that each outbound stream has been completely and reliably received, #2 is implied by #3, and #4 means the peer knows its stream was received, so it will not keep retransmitting.

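How prerequisite #4 is achieved

Option A

Option A: lingering after both streams end.

This happens when the local side initiates the close; lingering is TIME_WAIT, the last state before CLOSED.

The local side waits out the linger time to satisfy #4 as nearly as possible.

The local side satisfies #1 and #3, and the peer appears to satisfy #3 (so the local side appears to satisfy #4).

- The local side cannot know for certain that the peer satisfies #3, because TCP does not deliver ACKs reliably (TCP never ACKs an ACK); the ACK the local side sent may have been lost.

The local side can nevertheless become quite confident that the remote peer satisfies #3

- by waiting for a while (the linger time) during which the remote peer retransmits nothing.

linger time = 10 × the initial retransmission timeout (_cfg.rt_timeout); in production it is 2 MSL (60–120 s).

- Concretely: when the local side satisfies #1 and #3, and at least 10 × the initial retransmission timeout has passed since it last received any segment from the peer, the connection is done. This waiting after both streams have ended is called lingering; its purpose is to make sure the remote peer is not trying to retransmit something the local side needs to acknowledge.

- So the local TCPConnection has to stay alive a little longer, keeping its port and ACKing incoming segments, even though both local streams have ended and TCPSender and TCPReceiver have correctly finished their jobs. My implementation does this too.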

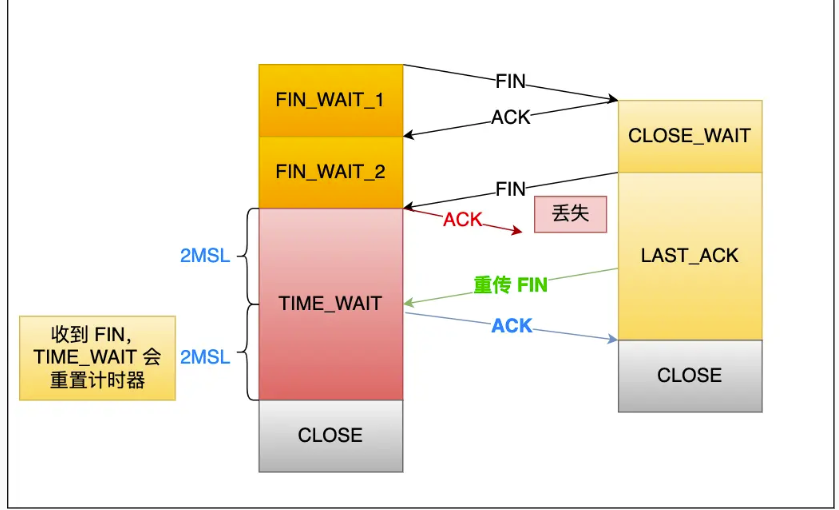

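An example of what TIME_WAIT is for:

In production TCP, the linger timer (also known as the time-wait timer, or twice the Maximum Segment Lifetime (MSL)) is typically 60–120 seconds. That is a long time to hold a port; nobody wants to wait that long, especially when you want to reuse the port number to start another local service. In socket programming, the SO_REUSEADDR option lets a socket bind to a local port even while connections on that port are still lingering. Even with lingering, however, there is no guarantee that the peer satisfies #3: the peer's retransmitted FIN may arrive after the linger time, or be lost entirely. The peer's TCPConnection could then stay alive, retransmitting its FIN segment (until it gives up after too many retransmissions), even though the local outbound and inbound streams have both closed, and so have the peer's.

- In summary: in an active close, satisfying #1 and #3 does not necessarily mean the local side satisfies #4; it must rely on the linger time to satisfy #4 as nearly as possible.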

Option B

Option B: passive close.

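This happens when the local side closes passively; it goes straight to CLOSED without entering TIME_WAIT.

#1 and #3 hold, and the local side is 100% certain that the remote peer satisfies #3 (i.e., that the remote peer received the local side's ACK of its FIN).

- How can it be 100% certain, given that, as noted above, TCP does not ACK ACKs?

- Because in a passive close, the remote peer ended its outbound_stream first.

How does the Option B rule work? Why, in a passive close, can the local side be 100% sure that the remote peer satisfies #3 (and hence that the local side satisfies #4)?

- It's a bit mind-bending, but it's fun to think about and gets to the deep reasons for the Two Generals Problem and the inherent constraints on reliability across an unreliable network.

- The reasoning:

- After receiving the remote peer's FIN (#1), the local side sends an ACK (and to satisfy #2 it must at least send a segment carrying both FIN and ACK). The ackno on that local FIN segment acknowledges the remote peer's FIN.

- Since the local side satisfies #3, the remote peer must have acknowledged that FIN+ACK segment. So the remote peer has seen the local FIN segment and its ackno, which acknowledged the remote's earlier FIN. Hence the remote peer is guaranteed to satisfy #3.

In summary: in a passive close, satisfying #1 and #3 guarantees that the local side satisfies #4, so no linger time is needed.

- The bottom line is that if the TCPConnection’s inbound stream ends before the TCPConnection has ever sent a FIN segment (that is, before the local outbound_stream ended; in other words, if the local side closes passively), then the TCPConnection doesn’t need to linger after both streams finish.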

The end of a TCP connection (practical summary)

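Practical guidance from the lab handout:

We can tell from the local side's state whether this is an active close (Option A) or a passive close (Option B).

class TCPConnection has a variable linger_after_streams_finish, indicating whether the local side must wait out the linger time before closing the connection.

- It starts out true.

- If the peer ends the inbound_stream before the outbound_stream reaches EOF, set it to false (i.e., for a passive close).

Whenever #1 and #3 are both satisfied:

- a. if linger_after_streams_finish = false, the connection is done (active() should return false);

- b. if linger_after_streams_finish = true, the local side waits long enough (to satisfy #4 as nearly as possible), and then the connection is done (active() should return false).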
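
Rules a and b, together with how the flag gets set, can be sketched as a small bookkeeping class. This is an illustration, not the lab's TCPConnection: ShutdownTracker and its methods are hypothetical names, and the 10 × initial-retransmission-timeout linger follows the rule quoted above.

```cpp
#include <cstddef>

// Hypothetical bookkeeping class applying the handout's practical rules.
class ShutdownTracker {
  public:
    // Inbound stream ended (peer's FIN reassembled): prerequisite #1.
    // If our own FIN has not been sent yet, this is a passive close.
    void on_inbound_ended(bool local_fin_already_sent) {
        inbound_ended_ = true;
        if (!local_fin_already_sent) {
            linger_after_streams_finish_ = false;
        }
    }
    // Peer acknowledged our FIN: prerequisite #3 (which implies #2).
    void on_local_fin_acked() { local_fin_acked_ = true; }
    void on_segment_received() { ms_since_last_segment_ = 0; }
    void on_tick(size_t ms) { ms_since_last_segment_ += ms; }

    bool lingers() const { return linger_after_streams_finish_; }

    // Rules a/b: once #1 and #3 hold, stop at once (passive close) or stop
    // after 10 x the initial retransmission timeout of silence (active close).
    bool active(size_t initial_rto_ms) const {
        if (!inbound_ended_ || !local_fin_acked_) {
            return true;  // #1 or #3 still missing
        }
        if (!linger_after_streams_finish_) {
            return false;  // rule a
        }
        return ms_since_last_segment_ < 10 * initial_rto_ms;  // rule b
    }

  private:
    bool inbound_ended_ = false;
    bool local_fin_acked_ = false;
    bool linger_after_streams_finish_ = true;  // starts out true
    size_t ms_since_last_segment_ = 0;
};
```

Unlike a real TCPConnection, which latches _active = false once the connection is done, this sketch recomputes active() on every call.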

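Convention: the caller of TCPConnection is called the owner.

Convention: "A closes actively" means A ends the A-to-B byte stream first.

Convention: "A closes passively" means A ends the A-to-B byte stream second, after the peer has ended its stream.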

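Implementation

Important members

- How TCPConnection sends segments:

- std::queue<TCPSegment> _segments_out{};

- void TCPConnection::send_segments()

- moves TCPSender's segments into TCPConnection's segment queue

- the owner then sends out the segments in that queue

- note that it piggybacks the ACK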

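About _linger_after_streams_finish

- _linger_after_streams_finish only matters for a clean shutdown.

- In an unclean shutdown it is meaningless, because an unclean shutdown does not care whether the byte streams were correctly and completely delivered to the receiver.

- Meaning of _linger_after_streams_finish: whether, after satisfying #1 and #3, the local side must wait a while longer to satisfy #4

- i.e., whether closing the connection goes through TIME_WAIT or straight to CLOSED.

- bool _linger_after_streams_finish{true};

- Initialized to true when TCPConnection is constructed, meaning the local side initially assumes that, after satisfying #1, #2 and #3, it must wait out the linger time to satisfy #4 and reach the "connection is done" condition.

- Overall, _linger_after_streams_finish remains true only when the local side closes actively.

- _linger_after_streams_finish = false

- After satisfying #1, #2 and #3, the local side satisfies #4 automatically and need not wait out the linger time.

- If the local inbound_stream ends before the local outbound_stream has sent its FIN segment, the local side need not wait out the linger time after both streams finish before closing the connection.

- Overall, _linger_after_streams_finish = false only when the local side closes passively.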

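```cpp
// FIN received from the peer while our own FIN has not been sent yet
// (sender still in a SYN_ACKED state): passive close, so no lingering
if (_receiver.state() == TCPReceiver::State::FIN_RECV &&
    (_sender.state() == TCPSender::State::SYN_ACKED_2 || _sender.state() == TCPSender::State::SYN_ACKED_1))
    _linger_after_streams_finish = false;
```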

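- Usage: as the discussion below shows, the local TCPConnection is done once #1, #2, #3 and #4 all hold.

- Passive close

- CLOSE_WAIT -> [LAST_ACK -> CLOSED]

- #1, #2 and #3 hold and #4 holds automatically (!_linger_after_streams_finish), so enter CLOSED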

```cpp
// In void TCPConnection::segment_received(const TCPSegment &seg):

// clean shutdown, local side closed passively: enter CLOSED
if (_receiver.state() == TCPReceiver::State::FIN_RECV && _sender.state() == TCPSender::State::FIN_ACKED &&
    !_linger_after_streams_finish) {
    clean_shutdown();
    return;
}

// // clean shutdown, local side closed actively: enter TIME_WAIT
// if (_receiver.state() == TCPReceiver::State::FIN_RECV && _sender.state() == TCPSender::State::FIN_ACKED &&
//     _linger_after_streams_finish) {
//     // TIME_WAIT!
//     // tick() checks whether the connection should be closed
// }
```

- Active close

- FIN_WAIT1 -> [FIN_WAIT2 -> TIME_WAIT -> CLOSED]

- #1, #2 and #3 hold, but the local side must wait out the linger time to satisfy #4

```cpp
// In void TCPConnection::tick(const size_t ms_since_last_tick):
if (_receiver.state() == TCPReceiver::State::FIN_RECV && _sender.state() == TCPSender::State::FIN_ACKED &&
    _linger_after_streams_finish) {
    // TIME_WAIT: end cleanly after 10 x the initial RTO without hearing from the peer
    if (_time_since_last_segment_received >= 10 * _cfg.rt_timeout)
        clean_shutdown();
}
```

Whether the local TCP is still alive

- bool _active{true};

- When is it true?

- From the start.

- When does it become false?

- On a clean shutdown or an unclean shutdown.

size_t _time_since_last_segment_received{0};

- Time elapsed since the last segment was received; used for TIME_WAIT.

clean shutdown

- each of the two ByteStreams has been reliably delivered completely to the receiving peer

- more complicated but beautiful

- Ensures, as far as possible, that both outbound_streams are completely and reliably delivered to the receiving peer

- clean_shutdown()

```cpp
void TCPConnection::clean_shutdown() {
    _active = false;
}
```

unclean shutdown

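- The TCPConnection receives or sends an RST segment

- Actions

- set the outbound and inbound streams to the error state

- active() can return false immediately.

- unclean_shutdown()

- Sending an RST segment (active): rst_to_send = true;

- Receiving an RST segment (passive): rst_to_send = false

- Before the RST is sent, segments_out() may still hold unsent segments; clear them. (I found no theoretical basis for this, but the tests require it.)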

```cpp
void TCPConnection::unclean_shutdown(bool rst_to_send /* = false */) {
    // drop any segments the sender has queued but not yet handed over
    queue<TCPSegment> empty;
    _sender.segments_out().swap(empty);
    if (rst_to_send) {
        // active abort: queue an empty segment with RST set and push it out
        _sender.send_empty_segment(true);
        this->send_segments();
    }
    // both byte streams end in the error state
    _sender.stream_in().set_error();
    _receiver.stream_out().set_error();
    _active = false;
    _linger_after_streams_finish = false;
}
```

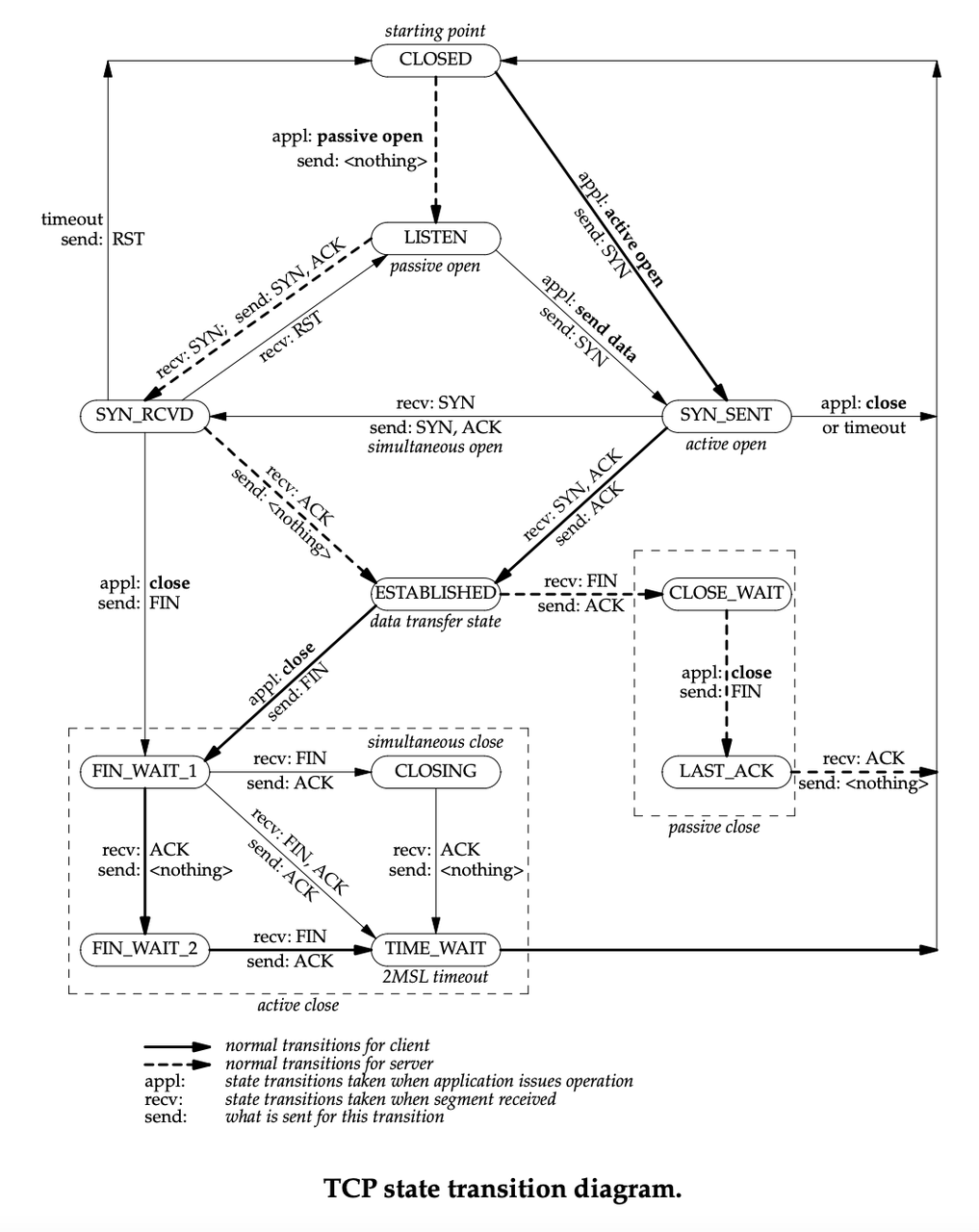

TCP State Transitions

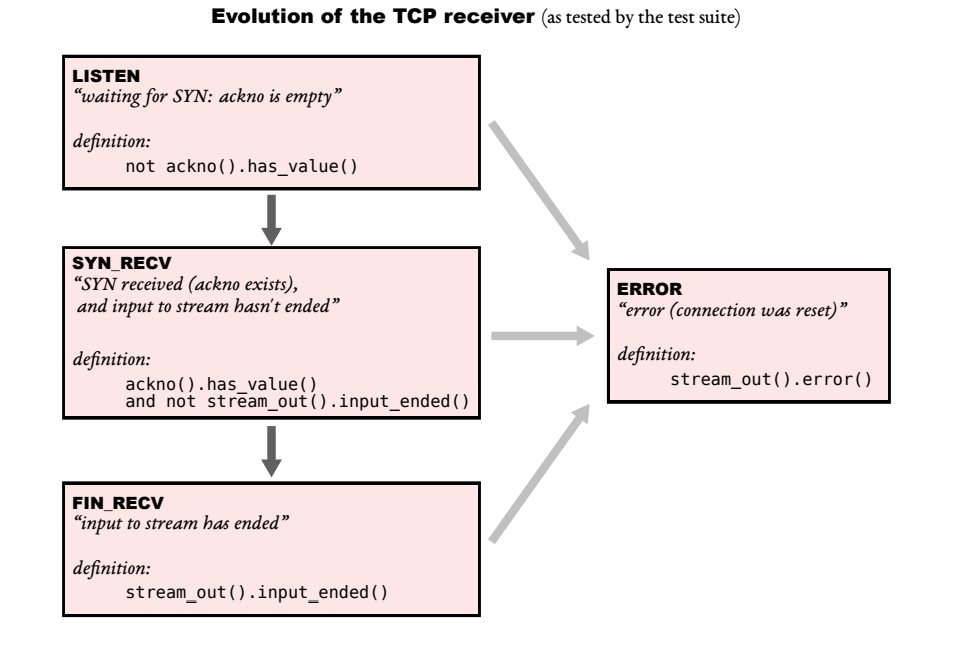

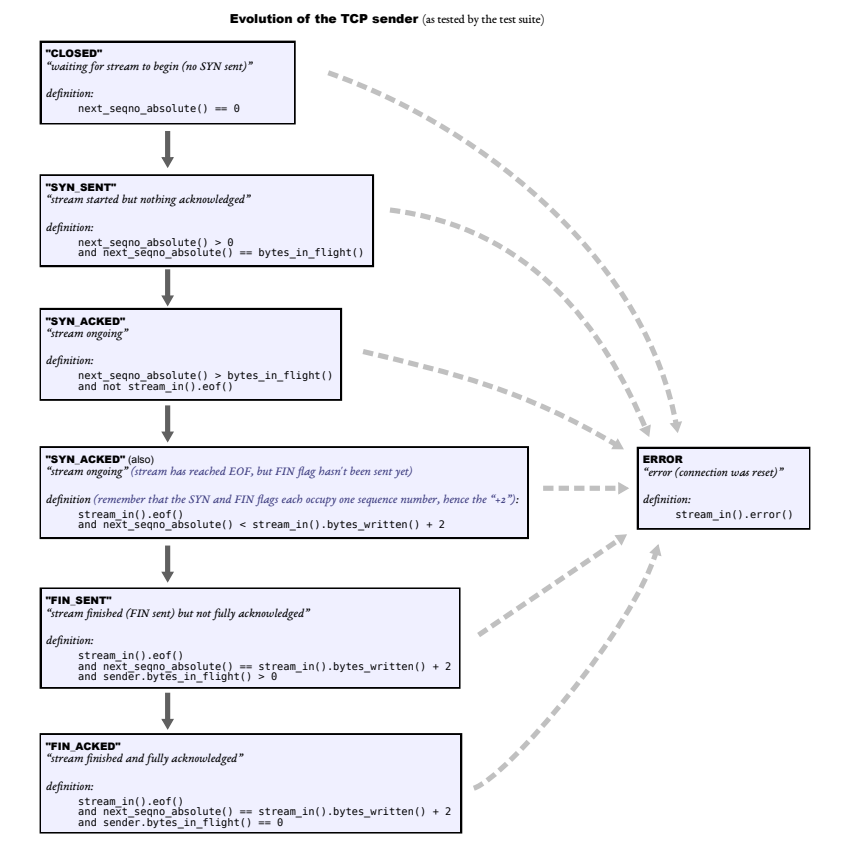

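TCPConnection states

- The handout says we don't have to maintain states in the Sender and Receiver, but for clarity my implementation maintains a state for each, following the descriptions in labs 1–3.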

- Receiver

- Sender

- The relationship between TCPConnection states and TCPSender/TCPReceiver states is shown in the tables below

- Setup: 1. the client actively opens a connection to the server; 2. the client actively closes the connection.

- Server state table:

| TCPConnection State | TCPReceiver State | TCPSender State | linger_after_streams_finish | active |
| --- | --- | --- | --- | --- |
| 1. (passive open) LISTEN | LISTEN | CLOSED | true | true |
| SYN_RCVD | SYN_RECV (recv SYN) | SYN_SENT (send SYN) | true | true |
| ESTABLISHED | SYN_RECV | SYN_ACKED (recv ACK) | true | true |
| 2. (passive close) CLOSE_WAIT | FIN_RECV (recv FIN) | SYN_ACKED | false | true |
| LAST_ACK | FIN_RECV | FIN_SENT (send FIN) | false | true |
| CLOSED | FIN_RECV | FIN_ACKED (recv ACK) | false | false |

- Client state table:

| TCPConnection State | TCPReceiver State | TCPSender State | linger_after_streams_finish | active |
| --- | --- | --- | --- | --- |
| 1. (active open) LISTEN | LISTEN | CLOSED | true | true |
| SYN_SENT | LISTEN | SYN_SENT | true | true |
| ESTABLISHED | SYN_RECV (recv SYN) | SYN_ACKED (recv ACK) | true | true |
| 2. (active close) FIN_WAIT1 | SYN_RECV | FIN_SENT (send FIN) | true | true |
| FIN_WAIT2 | SYN_RECV | FIN_ACKED (recv ACK) | true | true |
| TIME_WAIT | FIN_RECV | FIN_ACKED | true | true |
| CLOSED | FIN_RECV | FIN_ACKED | false | false |
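
The two tables can be read as a lookup from component states to the connection state. A sketch under stated assumptions: RecvState, SendState and connection_state are hypothetical names; SYN_ACKED stands in for the SYN_ACKED_1/SYN_ACKED_2 pair used in my code; a RESET case is added for an unclean shutdown (both components in an error state). It also covers the CLOSING row of the simultaneous-close table further down, where linger_after_streams_finish is what tells CLOSING apart from LAST_ACK.

```cpp
#include <string>

// Hypothetical component states, modeled on the tables above.
enum class RecvState { LISTEN, SYN_RECV, FIN_RECV, ERROR };
enum class SendState { CLOSED, SYN_SENT, SYN_ACKED, FIN_SENT, FIN_ACKED, ERROR };

std::string connection_state(RecvState r, SendState s, bool linger, bool active) {
    if (r == RecvState::ERROR && s == SendState::ERROR) {
        return "RESET";  // unclean shutdown
    }
    if (!active) {
        return (r == RecvState::FIN_RECV && s == SendState::FIN_ACKED) ? "CLOSED" : "UNKNOWN";
    }
    if (r == RecvState::LISTEN && s == SendState::CLOSED) return "LISTEN";
    if (r == RecvState::LISTEN && s == SendState::SYN_SENT) return "SYN_SENT";
    if (r == RecvState::SYN_RECV && s == SendState::SYN_SENT) return "SYN_RCVD";
    if (r == RecvState::SYN_RECV && s == SendState::SYN_ACKED) return "ESTABLISHED";
    if (r == RecvState::SYN_RECV && s == SendState::FIN_SENT) return "FIN_WAIT1";
    if (r == RecvState::SYN_RECV && s == SendState::FIN_ACKED) return "FIN_WAIT2";
    if (r == RecvState::FIN_RECV && s == SendState::SYN_ACKED) return "CLOSE_WAIT";
    // Same component states for both closers: the linger flag tells them apart.
    if (r == RecvState::FIN_RECV && s == SendState::FIN_SENT) return linger ? "CLOSING" : "LAST_ACK";
    if (r == RecvState::FIN_RECV && s == SendState::FIN_ACKED && linger) return "TIME_WAIT";
    return "UNKNOWN";  // e.g. the instant before a passive close sets active = false
}
```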

主动关闭连接可能会经历的四种状态

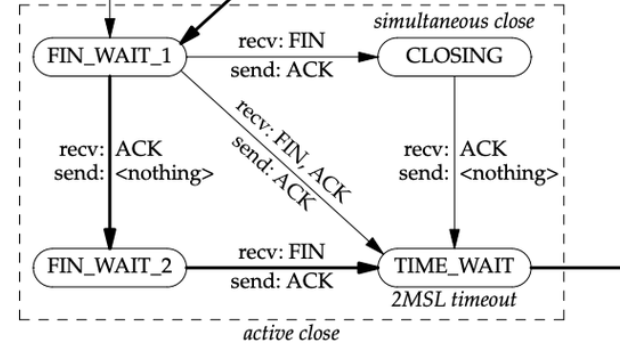

关于连接关闭时的state FIN_WAIT1 , FIN_WAIT2 , CLOSING , CLOSED 关系。

FIN_WAIT1 -> FIN_WAIT2 -> TIME_WAIT -> CLOSED

- 是常规的client主动关闭连接。如上client表所述

FIN_WAIT1 -> CLOSING -> TIME_WAIT -> CLOSED

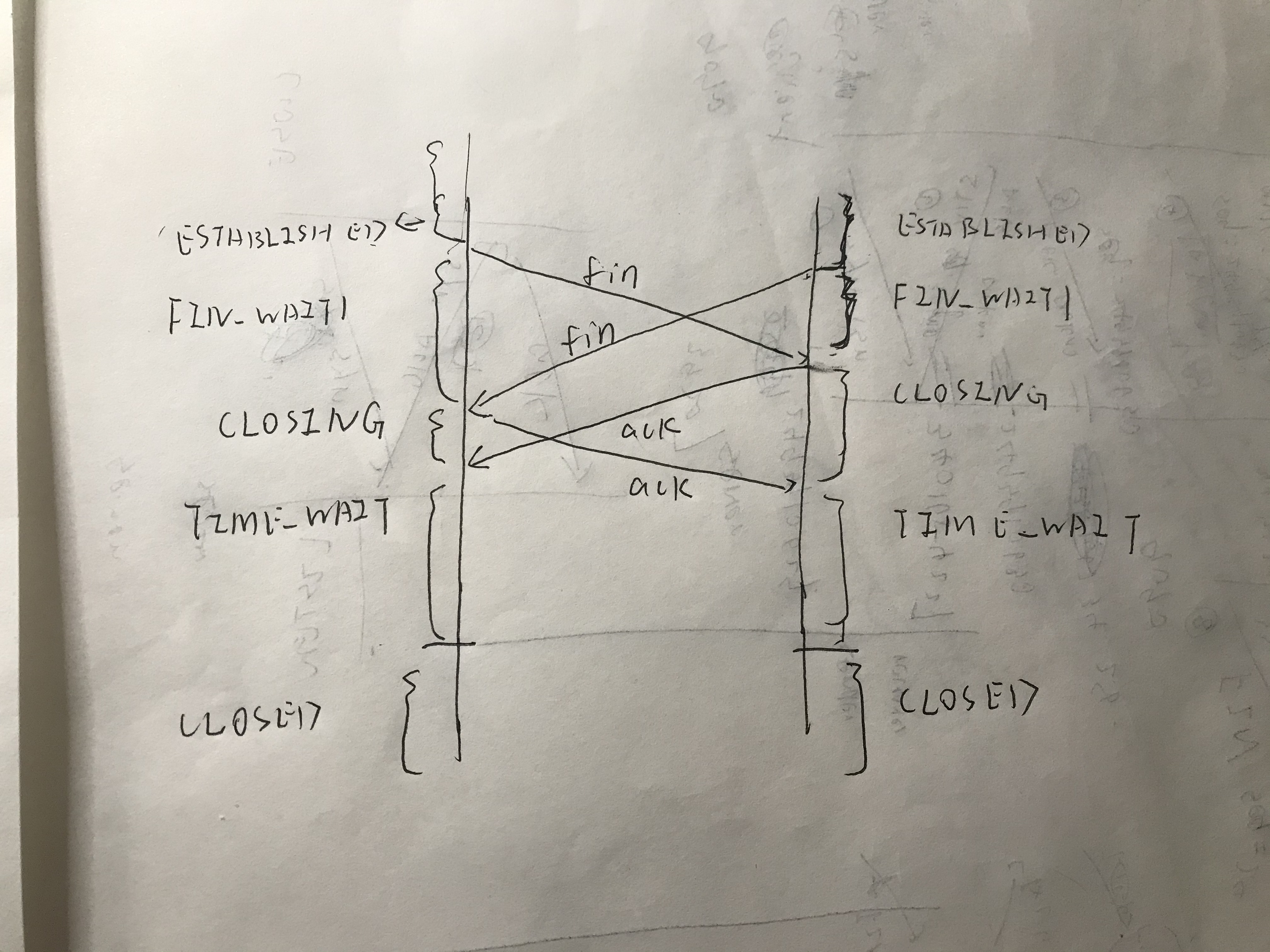

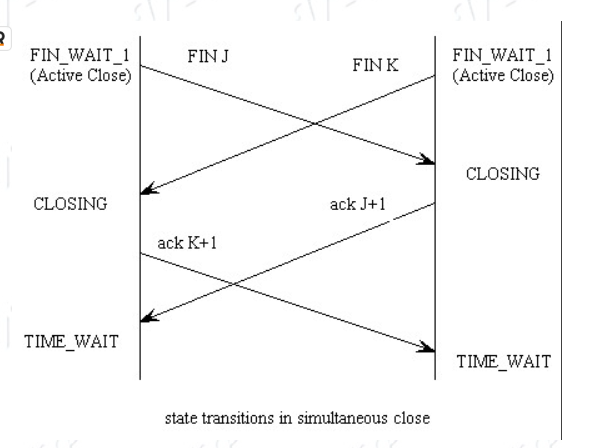

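- Why is there a CLOSING state?

- Both sides actively close the connection at the same time, i.e., both actively send a FIN segment,

- so the local side receives the remote peer's FIN before it receives the ACK for its own FIN.

- For example, the client actively closes and sends a FIN while the server, at the same time, also actively closes and sends a FIN.

- The table below is the client's; the server's table is identical.

- Why TIME_WAIT is needed: on going from CLOSING to FIN_RECV + FIN_ACKED, #1, #2 and #3 hold, but #4 cannot be guaranteed, because the local side sent its FIN actively and cannot be sure the peer received the ACK of the peer's FIN. (Had it sent its FIN passively, the ACK of the peer's FIN would travel with our FIN, and the peer's ACK of our FIN would show that the peer had received that ACK.)

| TCPConnection State | TCPReceiver State | TCPSender State | linger_after_streams_finish | active |
| --- | --- | --- | --- | --- |
| (active close) FIN_WAIT1 | SYN_RECV | FIN_SENT (send FIN) | true | true |
| CLOSING | FIN_RECV (recv FIN) | FIN_SENT | true | true |
| TIME_WAIT | FIN_RECV | FIN_ACKED | true | true |
| CLOSED | FIN_RECV | FIN_ACKED | false | false |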

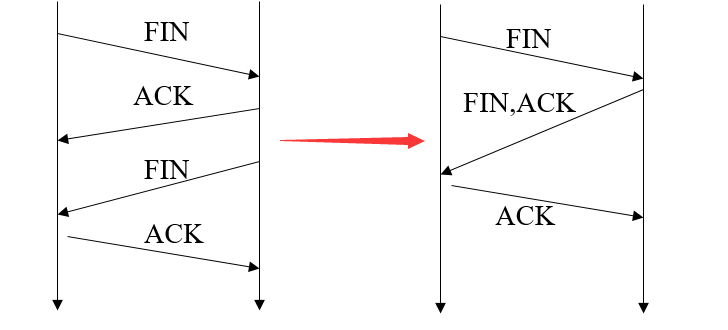

- FIN_WAIT1 -> TIME_WAIT

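- The peer's FIN and its ACK of the local FIN arrive together in a single segment

- When the passively closing side (the server in the figure above) has no data left to send during the close and TCP delayed acknowledgment is enabled, the second and third steps of the close are combined into one segment, giving a three-way close.

- TCP delayed acknowledgment: aims to send as few bare ACK segments as possible.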

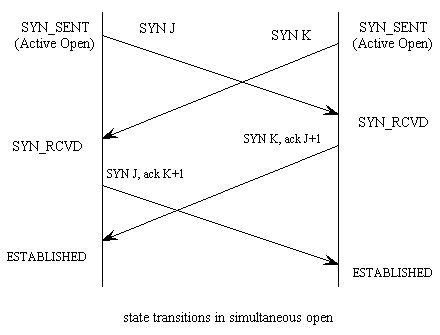

Simultaneous

Simultaneous Open

- Both sides send a SYN at the same time

- It’s possible for two applications to send a SYN to each other to start a TCP connection, although the possibility is small, because both sides have to know which port on the other side to send to. This process is called “Simultaneous Open“, or “simultaneous active open on both sides“.

- For example: An application at host A uses 7777 as the local port and connects to port 8888 on host B. At the same time, an application at host B uses 8888 as the local port and connects to port 7777 on host A. This is “Simultaneous Open”.

- TCP is specially designed to deal with “Simultaneous Open”, during which only one TCP connection is established, not two. The state transitions are shown in the following figure:

Simultaneous Close

- Both sides send a FIN at the same time

- It’s permitted in TCP for both sides to do “active close”, which is called “Simultaneous Close“. The state transitions are shown in the following figure:

故此 当然我的实现也可以正常处理这2种情况

bug

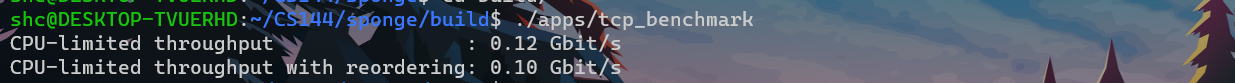

我写完第一次测试时 在模拟测试阶段,遇到了一些special case的bug,不过改起来也很愉快,写在case里。

第二次测试时,惊人的发现所有真实发送segment的测试都fail了,一度心态崩溃道心破碎,自己开server client 来回发送segment抓包还能正常工作,也看不到有什么异常的segment。明明没错啊但是还一个test都过不了,简直是给我搞得抓心挠肝。

后来研究了一下他的测试原理,应该是将我们tcpconnection收发的segment以及tcpconnection的类中所有的输出 都重定向到了测试文件a里。然后将这个a和输入的文件进行比较是否相等。由于重定向,我在code中的cout也被输入到了文件a中,故fail。

相等,则pass

不等,则fail

这谁能想到,遂将所有cout注释掉,测试,all passed,吾喜而笑,洗盏更酌,睡觉。
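
Since the failure came from stray cout output landing in the compared file, one defensive habit is to keep debug prints off stdout entirely. A minimal sketch, assuming the harness only compares what is written to stdout (the write-up only establishes that cout output broke the comparison): the hypothetical TCP_LOG macro expands to nothing unless TCP_DEBUG is defined, and even when enabled it writes to std::cerr.

```cpp
#include <iostream>

// Hypothetical debug macro: a no-op (its argument is not even evaluated)
// unless TCP_DEBUG is defined; when enabled it writes to std::cerr, never std::cout.
#ifdef TCP_DEBUG
#define TCP_LOG(msg) (std::cerr << "[tcp] " << msg << '\n')
#else
#define TCP_LOG(msg) ((void)0)
#endif
```

Building with -DTCP_DEBUG turns the messages back on for local debugging without touching the code.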

I'll write up how the tests work tomorrow.

Over !

Case

The closing case. Having worked through the CLOSING state above, this one is obvious.

```cpp
cout << "*********************start in CLOSING, send ack, time out**********************" << endl;
```

Give another client/server example.

Optimizations: to follow.

- Does the TCPConnection need any fancy data structures or algorithms?

- No, it really doesn’t. The heavy lifting is all done by the TCPSender and TCPReceiver that you’ve already implemented.

- The work here is really just about wiring everything up, and dealing with some lingering connection-wide subtleties that can’t easily be factored in to the sender and receiver.

- The hardest part will be deciding when to fully terminate a TCPConnection and declare it no longer active.